The data landscape has changed considerably since Kimball first proposed the dimensional approach to building a data warehouse schema. Over the last 20 years or so most data warehouses were built on the premise of federating data from operational systems into some kind of operational data store(s) and loading into a relational database built around star schemas. Well along comes this notion of the “data lake”.

The term “data lake” is an attempt to describe any large data pool in which the schema and data requirements are not defined until the data is queried. Uh oh, now what do we do there is bunch of unstructured data creeping into my enterprise things like click stream data, IoT data, etc.

Many organizations have gone to storing this data in technologies like Hadoop and JSON. So our “data lake” has turned into a “data tsunami”.

According to Gartner:

“… data warehousing has reached the most significant tipping point since its inception. The biggest, possibly most elaborate data management system in IT is changing. “

– Gartner, “The State of Data Warehousing in 2012”

In today’s blog we will talk about the features that have been added to SQL Server in the last couple of releases that allow us to extend the old data warehouse paradigm to encompass the “data tsunami”.

“Old School” Data Warehouse

Business reporting is one of the primary reasons that the data warehouse exists. Without a data warehouse someone in the organization, maybe even you, is going to ten different systems, grabbing different data exports and metrics, and consolidating that data on some giant Excel spreadsheet.

This led to the establishment of ETL (Extract, Transform, and Load) for consolidation of data from multiple systems before loading into the data warehouse.

Companies frequently spend tons of time and cash on ETL tools and database vendors (and sometimes consultants) to get a data warehouse built. I worked on a project for over 2 years working on establishing an enterprise data warehouse, bringing together data from 13 legacy systems.

The project cost millions and, to be honest, I am not sure if they ever got it fully implemented. After 2 years the project is out of data and incomplete. Not to mention its missing data from other sources that has become relevant in that time, such as:

- Non-relational data

- IoT, Device

- Social Media

- Web traffic

The “Cool Kid’s” Data Warehouse

The traditional data warehouse has served us well for many years, but new trends are causing it to break in four different ways:

- data growth

- fast query expectations from users

- non-relational/unstructured data

- cloud-born data

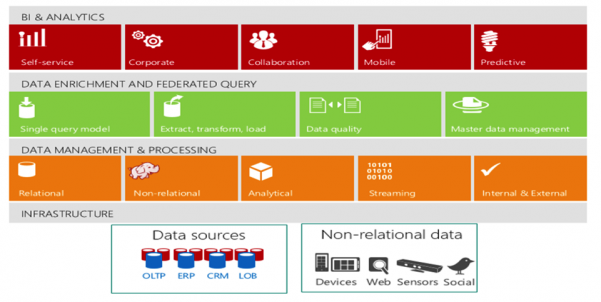

The “Modern” data warehouse handles relational data as well as unstructured data in Hadoop for example, while providing a way to easily interface with all these types of data through one query model. It can handle “big data” while providing very fast queries. Microsoft’s vision of the “modern” data warehouse looks like this:

Multiple big data structures create multiple platform options for storing data. The data warehouse becomes spread across multiple platforms as a result. This is because no one platform will run query and analysis workloads efficiently across all data. Diverse data will be loaded onto the platform based on storage, processing and budget requirements.

While a multi-platform approach adds more complexity to the data warehouse environment, BI/DW professionals have always managed complex technology stacks successfully, and end-users love the high performance and solid information outcomes they get from workload-tuned platforms.

An integrated RDBMS/HDFS (Hadoop) combo is an emerging architecture for the modern data warehouse. For example, an emerging best practice among data warehouse professionals with Hadoop experience is to manage non-relational data in HDFS (i.e. creating a “data lake“) but process it and move the results (via queries, ELT, or PolyBase) to RDBMSs (elsewhere in the data warehouse architecture) that are more conducive to SQL-based analytics. So HDFS serves as a massive data staging area for the data warehouse.

The secret sauce that unifies the RDBMS/HDFS architecture is a single query model which enables distributed queries based on standard SQL to simultaneously access data in the warehouse.

Microsoft SQL Server to the Rescue

Microsoft has introduced technologies in the release of SQL Server 2014 and SQL Server 2016 that make the “Data Warehouse of the Future” a reality. These technologies include:

- PolyBase (2016)

- JSON support (2016)

- Temporal Tables (2016)

- In Memory Tables (2014)

- ColumnStore Index (2014)

The advent of these technologies has allowed us to expand the reach of the data warehouse. Let’ take a look at these technologies.

PolyBase

PolyBase uses T-SQL statements to access data stored in HDFS or Azure Blob Storage. It was initially available in Parallel Data Warehouse (PDW). Originally SQOOP was used to access data in HDFS through SQL commands, but in that architecture the data was actually moved from the Hadoop cluster into SQL Server for querying. Performance was considerably compromised.

With PolyBase however, the data is queried in place. SQL queries are converted into MapReduce functions that run natively on the cluster. Based on statistics and corresponding costs, SQL Server decides when to generate map jobs on the fly, to be executed within Hadoop. This is also transparent to the actual end user or application.

It is possible to integrate data from two completely different file systems, providing freedom to store the data in either place. With PolyBase all of the SQL knowledge accumulated by millions of people becomes a useful tool which provides the ability to retrieve valuable information from Hadoop with SQL.

Key Benefits:

- Build the data lake w/o heavily investing in new resources, i.e. Java & map/reduce experts

- Leverage familiar & mature T-SQL scripts and constructs

- Seamless tool integration w/ PolyBase

JSON Support

Javascript Object Notation (JSON) is a fancy name for a simple idea: data stored as javascript variables. One of the reasons why NoSQL systems become popular is the fact that you can use composite objects where you can store attributes of primary entities with related records within the primary entity as an array or collection of sub-objects. As an example, in MongoDb or DocumentDb you would create one JSON document for Product and add related reviews as an array of JSON objects. Here is a sample JSON document.

{

“ProductID”:709,

“Name”:”Mountain Bike Socks, M”,

“Color”:”White”,

“Reviews”:[

{

“Reviewer”:{

“Name”:”John Smith”,

“Email”:”john@fourthcoffee.com”

},

“ReviewDate”:”2007-10-20T00:00:00″,

“Rating”:5,

“ModifiedDate”:”2007-10-20T00:00:00″

}

]

}

While relational databases have lots of use cases, there are areas where different technologies are a much better fit. One of them is flexible and complex real-time searching. Elasticsearch is one such search mechanism often used with JSON documents. With JSON support you can output data from SQL Server to ElasticSearch.

Temporal Tables

A temporal table can be defined as a table for which PERIOD definition exists comprising of system columns. These columns are available with data-type of datetme2 where the period of validity is recorded by the system.

Temporal tables are really two tables (data table, historical table). One table contains the current values while another handles the historic versions of the data. In the history table the previous versions of all records are recorded. So the most significant function of Temporal Table is that it allows storing data in a table at any point in time.

To query data in the history table, you must use temporal/time based queries. The SELECT statement FROM <table> clause has a new clause FOR SYSTEM_TIME with four temporal-specific sub-clauses to query data across the current and history tables:

- Point in time: AS OF <date_time>

- Exclusive bounds: FROM <start_date_time> TO <end_date_time>

- Inclusive lower bound, exclusive upper bound: BETWEEN <start_date_time> AND <end_date_time>

- Inclusive bounds: CONTAINED IN (<start_date_time> , <end_date_time>)

An example query:

SELECT DepartmentNumber,

DepartmentName,

ManagerID,

ParentDepartmentNumber

FROM DEPARTMENT

FOR SYSTEM_TIME AS OF ‘2014-01-01’

WHERE ManagerID = 5

Good utilization of Temporal Tables include:

- Slowly Changing Dimensions

o Data Table is Type 1

o Historical Table is Type 2

- Recover accidental data changes

In-Memory Tables

In-Memory Tables are held in memory at all times. Because it is held in memory and a columnstore index can be defined on the table, there are advantages to using these tables for data staging for data warehouse loads.

This is especially true for data loads from data being loaded from non-relational database structures (flat files, spreadsheets, CSV, etc.). With large data loads, not having to read from disk speeds up processing of ETL significantly

Columnstore Index

A columnstore is data that is logically organized as a table with rows and columns, and physically stored in a column-wise data format. A rowstore is data that is logically organized as a table with rows and columns, and then physically stored in a row-wise data format. A clustered columnstore index is the physical storage for the entire table.

Columnstore indexes increase data warehouse performance by:

- Storing similar values together in a column which results in high compression rates. This minimizes or eliminates IO bottleneck in your system while reducing the memory footprint significantly.

- High compression rates improve query performance by using a smaller in-memory footprint. In turn, query performance can improve because SQL Server can perform more query and data operations in-memory.

- Batch execution improves query performance, typically 2-4x, by processing multiple rows together.

- Queries often select only a few columns from a table, which reduces total I/O from the physical media.